AI learns to touch 👋 🧣

Exploring Human-AI Perception Alignment in Touch Experiences

We introduce perceptual alignment as a critical aspect of human-AI interaction, focusing on the gap between how humans and AI perceive touch.

Our research presents the first exploration of this alignment through the “textile hand” task across two studies, examining how well LLMs align with human touch experiences (Zhong et al., 2024) and (Zhong et al., 2024).

Study 1

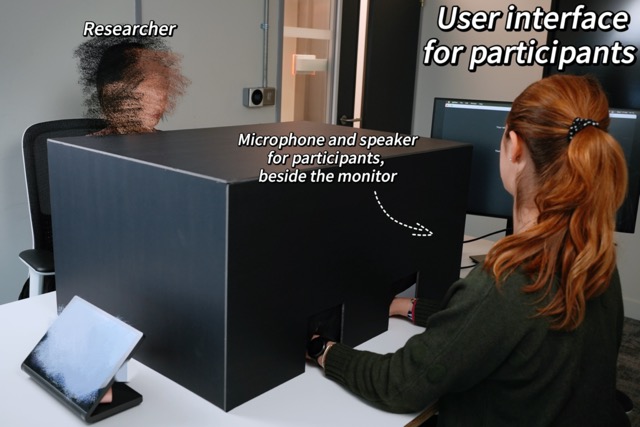

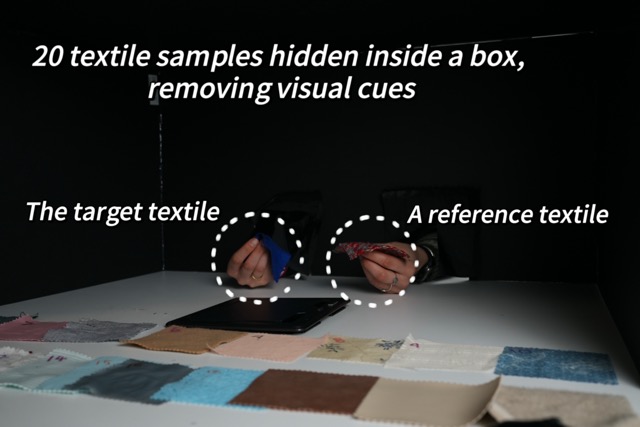

We assess how multimodal LLMs interpret the tactile qualities of textiles compared with human perception, a key challenge for online shopping environments. We ran an in-person study with 30 participants and evaluated models from three GenAI families:

- OpenAI GPTs,

- Google Geminis,

- Anthropic Claude 3.

Check the paper: Feeling Textiles through AI: An exploration into Multimodal Language Models and Human Perception Alignment.

Study 2

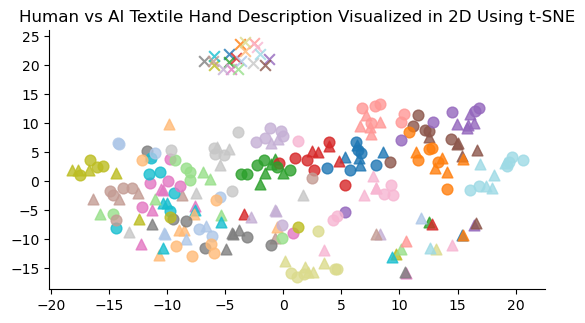

- We introduce a novel interactive task that probes LLMs’ learned representations for human alignment.

- First study of how well LLM embeddings align with human touch experiences.

- LLMs show perceptual biases, aligning better with certain textiles than others.
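As a rough illustration of what comparing touch descriptions and textiles in an embedding space can look like, here is a minimal sketch. It is not the procedure used in the paper: the function names, the textile labels, and the three-dimensional vectors are all hypothetical placeholders, and a real setup would take its vectors from an LLM embedding model.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_candidates(description_vec, textile_vecs):
    """Return textile names sorted from most to least similar to the description."""
    scores = {
        name: cosine_similarity(description_vec, vec)
        for name, vec in textile_vecs.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


# Placeholder vectors, invented for this example only (not study data).
textile_vecs = {
    "silk": [0.9, 0.1, 0.2],
    "denim": [0.1, 0.9, 0.3],
    "wool": [0.2, 0.4, 0.9],
}

# Hypothetical embedding of a participant's description of how a textile feels.
description_vec = [0.8, 0.2, 0.1]

ranking = rank_candidates(description_vec, textile_vecs)
print(ranking)
```

Under this toy setup, a model would count as aligned on a trial when the textile the participant actually handled appears at the top of the ranking. Per-textile differences in that hit rate are one way a perceptual bias could show up.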

For details, check our video and paper.

References

2024

- Feeling Textiles through AI: An exploration into Multimodal Language Models and Human Perception Alignment. In Proceedings of the 26th International Conference on Multimodal Interaction, 2024

- Exploring Human-AI Perception Alignment in Sensory Experiences: Do LLMs Understand Textile Hand? arXiv preprint arXiv:2406.06587, 2024